For enterprises choosing between third-party SaaS and a private AI, an on-premise solution offers unparalleled security, cost-efficiency, and performance. By leveraging agentic RAG and specialized sLLMs, owning your AI stack becomes a strategic imperative, not just a preference.

In the race to deploy generative AI, enterprises face a critical choice: trust a third-party SaaS product like ChatGPT with your data and workflows, or build a secure, sovereign AI ecosystem on your own infrastructure. While the simplicity of SaaS is tempting, an on-premise solution, especially one featuring agentic RAG and specialized, fine-tuned sLLMs, offers long-term advantages in security, cost-efficiency, and performance.

For serious enterprise use, owning your AI stack isn't just a preference; it's a strategic imperative. Platforms like Allganize are leading the charge, providing the tools for companies to build and deploy powerful AI agents within their own secure infrastructure. Let's break down why this approach is superior to relying on a generalized SaaS model.

The most significant advantage of an on-premise deployment is data security. When you use a SaaS AI product, you are sending your proprietary, sensitive, and confidential data to a third-party server. This data can include customer information, financial records, trade secrets, and internal communications. Even with contractual assurances, your data is fundamentally outside of your control, creating an inherent security risk and potential compliance nightmare with regulations like GDPR, HIPAA, and CCPA. As detailed in "Top 7 SaaS Security Risks (and How to Fix Them)", relying on third-party vendors introduces risks like cloud misconfigurations and supply chain vulnerabilities.

An on-premise (or private cloud) solution, like the one offered by Allganize, removes this third-party exposure. Your data never leaves your private cloud or on-premise servers. The entire AI stack, from the models to the applications, operates within your firewall, and the strictest deployments can be fully air-gapped, with no external network connection at all. This approach is the gold standard for industries where data privacy is non-negotiable, such as finance, healthcare, and legal.
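One practical way to back up the "nothing leaves the firewall" claim is to audit the endpoints your AI stack is configured to call. The snippet below is a minimal sketch, not part of any Allganize product: the `ENDPOINTS` names and URLs are made up for illustration, and the check only understands literal IP addresses, so hostnames are conservatively reported as unverified.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical configuration: every name and URL here is an illustrative assumption.
ENDPOINTS = {
    "llm": "http://10.0.0.5:8080/v1",
    "vector_store": "http://192.168.1.20:6333",
    "telemetry": "https://8.8.8.8/collect",
}


def is_internal_endpoint(url: str) -> bool:
    """Return True only if the URL's host is a literal private or loopback IP."""
    host = urlparse(url).hostname
    if host is None:
        return False
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # Hostnames would need DNS resolution; this sketch treats them as unverified.
        return False
    return address.is_private or address.is_loopback


def find_external_endpoints(endpoints: dict[str, str]) -> list[str]:
    """List the names of endpoints that could send data outside the private network."""
    return [name for name, url in endpoints.items() if not is_internal_endpoint(url)]


if __name__ == "__main__":
    print(find_external_endpoints(ENDPOINTS))
```

A check like this is only a starting point. Real deployments would also rely on firewall rules and network policy rather than configuration review alone.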

SaaS AI models often operate on a pay-per-use or subscription basis. While this may seem affordable initially, costs can quickly become unpredictable and exorbitant as your usage scales. Every API call, every query, and every document processed adds to your monthly bill. This variable spending makes budgeting difficult and can penalize growth.

In contrast, an on-premise model represents a more predictable, long-term investment. While there are upfront costs for hardware and setup, the total cost of ownership (TCO) is often significantly lower over time at sustained, high usage. You are not paying per transaction. Instead, you own the infrastructure and can scale your AI operations without fees that climb with every additional request. This shift from a variable operational expense (OpEx) to a predictable capital expense (CapEx), or to a fixed subscription for the on-premise software, provides financial stability and control.
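To make the cost comparison concrete, here is a rough break-even sketch. Every figure in it is a hypothetical assumption chosen for illustration, not real vendor pricing. It compares cumulative per-query SaaS spend against an upfront on-premise cost plus a fixed monthly cost, and finds the first month in which on-premise becomes the cheaper option.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SaasPlan:
    cost_per_thousand_queries: int  # hypothetical fee in dollars


@dataclass
class OnPremPlan:
    upfront_cost: int        # hypothetical hardware and setup cost in dollars
    fixed_monthly_cost: int  # hypothetical power, support, and license cost


def cumulative_saas_cost(plan: SaasPlan, thousand_queries_per_month: int, months: int) -> int:
    """Total SaaS spend after the given number of months at constant usage."""
    return plan.cost_per_thousand_queries * thousand_queries_per_month * months


def cumulative_on_prem_cost(plan: OnPremPlan, months: int) -> int:
    """Total on-premise spend: one-time upfront cost plus fixed monthly costs."""
    return plan.upfront_cost + plan.fixed_monthly_cost * months


def break_even_month(
    saas: SaasPlan,
    on_prem: OnPremPlan,
    thousand_queries_per_month: int,
    horizon_months: int = 120,
) -> Optional[int]:
    """First month where on-premise is no more expensive than SaaS, or None."""
    for month in range(1, horizon_months + 1):
        saas_total = cumulative_saas_cost(saas, thousand_queries_per_month, month)
        if cumulative_on_prem_cost(on_prem, month) <= saas_total:
            return month
    return None


if __name__ == "__main__":
    saas = SaasPlan(cost_per_thousand_queries=20)
    on_prem = OnPremPlan(upfront_cost=118_000, fixed_monthly_cost=4_000)
    print(break_even_month(saas, on_prem, thousand_queries_per_month=500))  # 20
    print(break_even_month(saas, on_prem, thousand_queries_per_month=100))  # None
```

With these made-up numbers, heavy usage pays back the hardware in 20 months, while light usage never breaks even. That is why the comparison depends on your expected query volume.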

Frontier models like GPT-4 are incredibly powerful, but they are generalists. They know a little bit about everything but are not experts in your specific business domain. For enterprise tasks, this can lead to generic, irrelevant, or even inaccurate ("hallucinated") responses.

This is where the power of a fine-tuned Small Language Model (sLLM) comes in. An sLLM is a more compact, efficient model that is fine-tuned on your company's data. As explained in a recent Forbes article, sLLMs are highly effective for specialized tasks and can offer significant advantages in speed and cost.

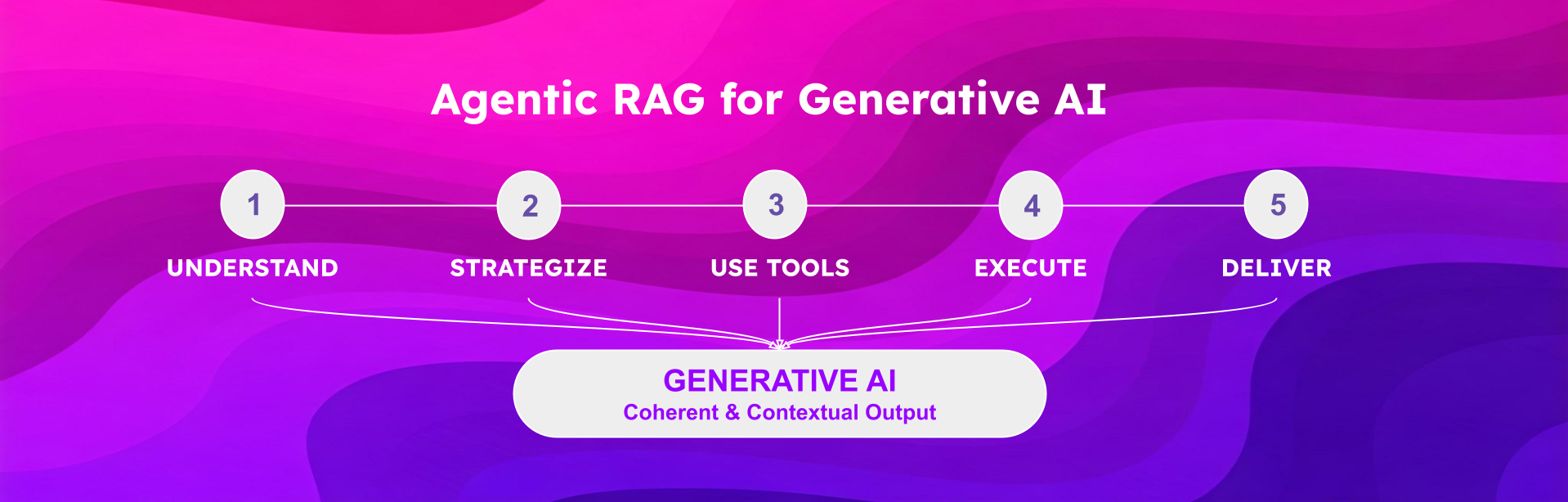

The true power of modern enterprise AI lies in autonomous agents that can execute complex, multi-step tasks. This is where agentic RAG (Retrieval-Augmented Generation) and agent builders shine.

As IBM explains, agentic RAG enhances traditional RAG by adding a layer of intelligent agents that can reason, plan, and use various tools to fulfill complex requests. It's more than just finding a document and summarizing it. It's a framework in which an AI agent breaks a request into steps, decides which sources and tools each step needs, and combines the results into an answer.

When you combine an agent builder with an on-premise, fine-tuned sLLM, you create a powerhouse. You can build specialized AI agents, such as a financial analyst agent that pulls real-time data from internal databases or a compliance agent that monitors internal communications, that operate securely with your most sensitive information. This level of secure, autonomous workflow is hard to achieve with a public SaaS tool that lacks deep, secure integration with your internal systems.
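The agent pattern described above can be sketched in a few lines. This is a toy illustration under loud assumptions, not Allganize's implementation or any real framework's API: the documents are invented, the retriever is plain keyword overlap, and the `plan` function is a hard-coded stand-in for the step where a real agent would ask the sLLM what to do next.

```python
import re

# Hypothetical in-memory corpus standing in for internal documents.
DOCUMENTS = {
    "q3_report": "Q3 revenue was 120 and Q3 costs were 80.",
    "travel_policy": "Employees must book travel 14 days in advance.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str) -> str:
    """Toy retriever: return the document sharing the most words with the query."""
    query_words = tokenize(query)
    return max(DOCUMENTS.values(), key=lambda text: len(query_words & tokenize(text)))


def extract_number(text: str, label: str) -> int:
    """Toy tool: pull the integer that follows '<label> was' or '<label> were'."""
    match = re.search(rf"{label} (?:was|were) (\d+)", text, flags=re.IGNORECASE)
    if match is None:
        raise ValueError(f"no value found for {label!r}")
    return int(match.group(1))


def plan(question: str) -> list[str]:
    """Stand-in for the model's planning step; a real agent would query the sLLM."""
    if "profit" in question.lower():
        return ["retrieve", "extract:revenue", "extract:costs", "subtract"]
    return ["retrieve", "answer_from_context"]


def run_agent(question: str) -> str:
    """Execute the planned steps in order, carrying context between them."""
    context = ""
    values: list[int] = []
    for step in plan(question):
        if step == "retrieve":
            context = retrieve(question)
        elif step.startswith("extract:"):
            values.append(extract_number(context, step.split(":", 1)[1]))
        elif step == "subtract":
            return f"Profit: {values[0] - values[1]}"
    return context


if __name__ == "__main__":
    print(run_agent("What was Q3 profit?"))
    print(run_agent("What is the travel policy for employees?"))
```

The point of the sketch is the shape of the loop: plan, retrieve, call tools, then answer. In a production agent, each of those stand-ins would be replaced by a model call, a vector search, and real integrations with internal systems.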

While the benefits are clear, building an on-premise AI ecosystem from scratch is complex. This is where a platform like Allganize becomes essential. Allganize provides the complete technology stack (including enterprise-grade agent builders, agentic RAG frameworks, and support for fine-tuned sLLMs) designed for secure, on-premise deployment.

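With Allganize, you can build agents with the agent builder, ground them in your own documents through agentic RAG, and run fine-tuned sLLMs, all inside your own infrastructure.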

Don't compromise on security, cost, or performance. While SaaS AI tools have their place, serious enterprises require a solution that puts them in the driver's seat. Owning your AI stack is the future, and on-premise platforms are the key to unlocking it.

Allganize's generative AI platform and fine-tuned sLLMs are optimized for on-premise deployments where security, control, and targeted access to local data are key to the success of an AI initiative. To find out if an on-premise or private cloud deployment is best for your project, book an appointment with one of our AI architects.
